Sql/create_table.sql sql="""CREATE TABLE IF NOT EXISTS twitter_etl_table( You'll also need to import the extract and transform Python files.įrom import PythonOperatorįrom _operator import PostgresOperator Start by importing the different Airflow operators.

#AIRFLOW ETL DIRECTORY HOW TO#

Restart the webserver, reload the web UI, and you should now have a clean UI: Airflow UI How to Use the Postgres Operator load_examples = False Disable example dags In the airflow.cfg config file, find the load_examples variable, and set it to False. The Airflow UI is currently cluttered with samples of example dags. Change this to LocalExecutor: executor = LocalExecutor Airflow DAG Executor Inside the Airflow directory created in the virtual environment, open the airflow.cfg file in your text editor, locate the variable named sql_alchemy_conn, and set the PostgreSQL connection string: sql_alchemy_conn = Airflow executor is currently set to SequentialExecutor. Once completed, scroll to the bottom of the screen and click on Save. Click on test to verify the connection to the database server.

#AIRFLOW ETL DIRECTORY PLUS#

Click on the plus sign at the top left corner of your screen to add a new connection and specify the connection parameters. You should already have apache-airflow-providers-postgres and psycopg2 or psycopg2-binary installed in your virtual environment.įrom the UI, navigate to Admin -> Connections. Airflow UI showing created dags How to Set Up a Postgres Database Connection Search for a dag named ‘etl_twitter_pipeline’, and click on the toggle icon on the left to start the dag. Open the browser on localhost:8080 to view the UI. Then start the web server with this command: airflow webserver Start the scheduler with this command: airflow scheduler With a dag_id named 'etl_twitter_pipeline', this dag is scheduled to run every two minutes, as defined by the schedule interval. Start by importing the different airflow operators like this: from airflow import DAGįrom import EmptyOperatorĭescription="A simple twitter ETL pipeline using Python,PostgreSQL and Apache Airflow",

#AIRFLOW ETL DIRECTORY INSTALL#

Install the provider package for the Postgres database like this: pip install apache-airflow-providers-postgres How to Set Up the DAG ScriptĬreate a file named etl_pipeline.py inside the dags folder. If this fails, try installing the binary version like this: pip install psycopg2-binary Install the libraries pip install psycopg2 You should have PostgreSQL installed and running on your machine. To store your data, you'll use PostgreSQL as a database. # perform data cleaning and transformationĬontents of transform.py file The DatabaseĪirflow comes with a SQLite3 database. Postgres_sql_upload.bulk_load('twitter_etl_table', data) Postgres_sql_upload = PostgresHook(postgres_conn_id="postgres_connection") Tweets_df = pd.DataFrame(tweets_list, columns=)įrom _hook import PostgresHookĭata = data.to_csv(index=None, header=None) Tweets_list.append([tweet.date,, tweet.rawContent, Inside the Airflow dags folder, create two files: extract.py and transform.py.Įxtract.py: import as sntwitterįor i,tweet in enumerate(sntwitter.TwitterSearchScraper('Chatham House since:').get_items()): Make sure your Airflow virtual environment is currently active. You will also need Pandas, a Python library for data exploration and transformation. Numerous libraries make it easy to connect to the Twitter API. To get data from Twitter, you need to connect to its API. Tons of data is generated daily through this platform. Twitter is a social media platform where users gather to share information and discuss trending world events/topics.

Airflow development environment up and running.Apache Airflow installed on your machine.

To follow along with this tutorial, you'll need the following:

#AIRFLOW ETL DIRECTORY DOWNLOAD#

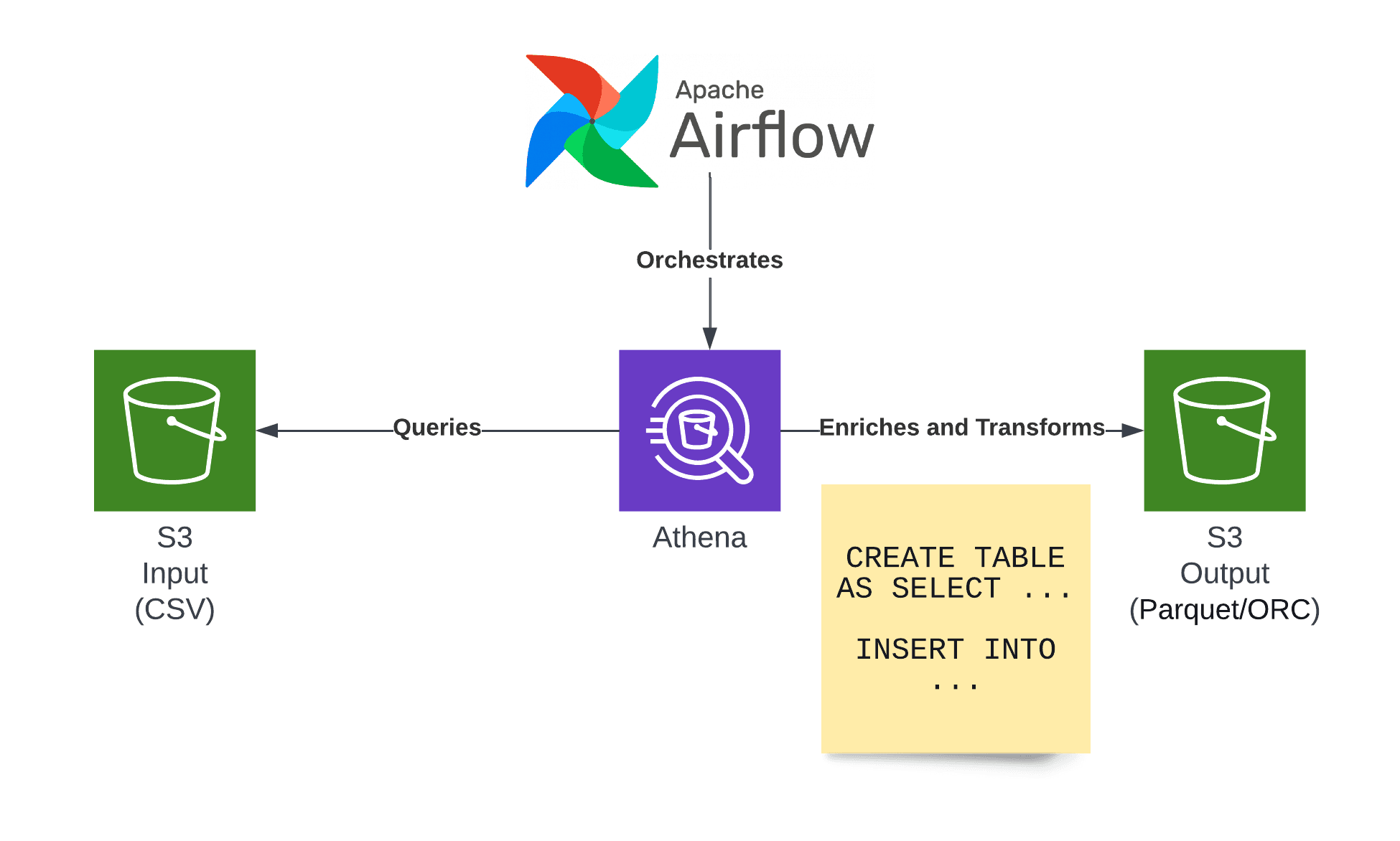

It will download data from Twitter, transform the data into a CSV file, and load the data into a Postgres database, which will serve as a data warehouse.Įxternal users or applications will be able to connect to the database to build visualizations and make policy decisions. In this guide, you will be writing an ETL data pipeline. Airflow makes it easier for organizations to manage their data, automate their workflows, and gain valuable insights from their data With Airflow, data teams can schedule, monitor, and manage the entire data workflow. Data Orchestration involves using different tools and technologies together to extract, transform, and load (ETL) data from multiple sources into a central repository.ĭata orchestration typically involves a combination of technologies such as data integration tools and data warehouses.Īpache Airflow is a tool for data orchestration.
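
In the transform step, the DataFrame is converted with to_csv and the result is passed straight to PostgresHook.bulk_load. The Postgres provider's bulk_load(table, tmp_file) expects the path of a tab-delimited file. It streams that file with `COPY table FROM STDIN` in PostgreSQL's default text format, so a CSV string is not what it reads.

The sketch below shows one way to clean rows and write them in that format. It deliberately uses only the standard library instead of Pandas. The row layout (created_at, tweet_id, content), the sample data, and the helper names clean, copy_escape, to_copy_text and write_for_bulk_load are all hypothetical. The real column list of extract.py is not given in this guide.

```python
import os
import tempfile
from datetime import datetime

# Hypothetical rows shaped like (created_at, tweet_id, content); the real
# column list used in extract.py is an assumption here.
ROWS = [
    (datetime(2023, 3, 1, 12, 0), 101, "  event\trecap\nline two  "),
    (datetime(2023, 3, 1, 12, 0), 101, "  event\trecap\nline two  "),  # duplicate id
    (datetime(2023, 3, 1, 12, 5), 102, "   "),                          # blank text
    (datetime(2023, 3, 1, 12, 9), 103, None),                           # missing text
]


def clean(rows):
    """Drop repeated tweet ids and blank texts; trim surrounding whitespace."""
    seen, cleaned = set(), []
    for created_at, tweet_id, content in rows:
        if tweet_id in seen:
            continue
        seen.add(tweet_id)
        text = content.strip() if content is not None else None
        if text == "":
            continue
        cleaned.append((created_at, tweet_id, text))
    return cleaned


def copy_escape(value):
    """Render one value in PostgreSQL COPY text format (None becomes \\N)."""
    if value is None:
        return "\\N"
    text = value.isoformat(sep=" ") if isinstance(value, datetime) else str(value)
    # Escape backslashes first so the escapes added below are not doubled.
    return (text.replace("\\", "\\\\")
                .replace("\t", "\\t")
                .replace("\n", "\\n")
                .replace("\r", "\\r"))


def to_copy_text(rows):
    """Join values with tabs and terminate every row with a newline."""
    return "".join("\t".join(copy_escape(v) for v in row) + "\n" for row in rows)


def write_for_bulk_load(rows):
    """Write rows to a temporary .tsv file and return its path."""
    # newline="" keeps "\n" row terminators unchanged on every platform.
    with tempfile.NamedTemporaryFile("w", suffix=".tsv", delete=False,
                                     encoding="utf-8", newline="") as fh:
        fh.write(to_copy_text(rows))
        return fh.name


if __name__ == "__main__":
    path = write_for_bulk_load(clean(ROWS))
    with open(path, encoding="utf-8", newline="") as fh:
        print(fh.read(), end="")
    # In an Airflow task the path would be passed on instead, e.g.
    # PostgresHook(postgres_conn_id="postgres_connection").bulk_load("twitter_etl_table", path)
    os.remove(path)
```

COPY without a column list fills the table's columns in order, so the tuple order has to match the column order of twitter_etl_table.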

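The DAG in this guide is scheduled to run every two minutes. In Airflow 2, each scheduled run covers a data interval and is triggered after that interval ends. With a two-minute interval, the run for 12:00 to 12:02 therefore starts at about 12:02. With catchup enabled (the default), the scheduler also creates runs for past intervals between start_date and now.

The plain-Python model below illustrates that rule. It is not Airflow's scheduler code, and the function name, start date and clock reading are hypothetical. In the DAG definition itself, such an interval can be given as schedule_interval=timedelta(minutes=2) or as the cron expression "*/2 * * * *".

```python
from datetime import datetime, timedelta


def due_intervals(start, every, now):
    """Return (start, end) data intervals whose runs would be due by `now`.

    A run becomes due only once its whole interval has elapsed.
    """
    if every <= timedelta(0):
        raise ValueError("interval must be positive")
    intervals = []
    begin = start
    while begin + every <= now:
        intervals.append((begin, begin + every))
        begin += every
    return intervals


if __name__ == "__main__":
    # Hypothetical start date and clock reading.
    start = datetime(2023, 3, 1, 12, 0)
    now = datetime(2023, 3, 1, 12, 7)
    for begin, end in due_intervals(start, timedelta(minutes=2), now):
        print(f"run for {begin:%H:%M}-{end:%H:%M} triggers at {end:%H:%M}")
```

At 12:07 only three intervals have fully elapsed; the 12:06 to 12:08 interval is still open, so its run is not yet due.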
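
The value of sql_alchemy_conn is a SQLAlchemy database URL. For PostgreSQL with the psycopg2 driver installed earlier, it takes the form postgresql+psycopg2://user:password@host:port/database. Characters such as @, / or : in the password must be percent-encoded so that the URL still parses.

The helper below is a hypothetical sketch of building that string. The user name, password, host, port and database name in the usage line are placeholders, not values from this guide.

```python
from urllib.parse import quote


def postgres_sqlalchemy_url(user, password, host, port, database, driver="psycopg2"):
    """Build a SQLAlchemy URL such as postgresql+psycopg2://user:pw@host:5432/db."""
    # safe="" makes quote() encode "/" as well, which it leaves alone by default.
    return (f"postgresql+{driver}://{quote(user, safe='')}:{quote(password, safe='')}"
            f"@{host}:{port}/{quote(database, safe='')}")


if __name__ == "__main__":
    # Placeholder credentials for illustration only.
    print(postgres_sqlalchemy_url("airflow_user", "p@ss/word", "localhost", 5432, "airflow_db"))
```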

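Instead of editing airflow.cfg, Airflow can also read any option from an environment variable named AIRFLOW__{SECTION}__{KEY}. Such variables take precedence over the file, which helps keep credentials out of it.

The sketch below maps the three settings changed in airflow.cfg to those variable names. It assumes Airflow 2.3 or later, where sql_alchemy_conn lives in the [database] section; older releases kept it under [core]. The connection string is a placeholder, and the helper name airflow_env_overrides is hypothetical.

LocalExecutor needs a metadata database other than SQLite. That is why the Postgres connection string has to be set alongside the executor change.

```python
def airflow_env_overrides(settings):
    """Map {(section, key): value} to AIRFLOW__SECTION__KEY environment variables."""
    return {f"AIRFLOW__{section.upper()}__{key.upper()}": str(value)
            for (section, key), value in settings.items()}


SETTINGS = {
    ("core", "executor"): "LocalExecutor",
    ("core", "load_examples"): False,
    # Placeholder credentials; [database] is the section used from Airflow 2.3 on.
    ("database", "sql_alchemy_conn"):
        "postgresql+psycopg2://airflow_user:secret@localhost:5432/airflow_db",
}

if __name__ == "__main__":
    # Print shell export lines that could be sourced before starting Airflow.
    for name, value in sorted(airflow_env_overrides(SETTINGS).items()):
        print(f"export {name}='{value}'")
```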